Citus is a distributed database that is built entirely as an open source PostgreSQL extension. In fact, you can install it in your PostgreSQL server without changing any PostgreSQL functionality. Citus simply gives PostgreSQL additional superpowers.

Being an extension also means we can keep adding new Postgres superpowers at a high pace. In the last release (11.0), we focused on giving you the ability to query from any node, opening up Citus for many new use cases, and we also made Citus fully open source. That means you can see everything we do on the Citus GitHub page (and star the repo if you’re a fan 😊). It also means that everyone can take advantage of shard rebalancing without write-downtime.

In the latest release (11.1), our Citus database team at Microsoft improved the application experience by avoiding blocking writes during important operations like distributing tables and tenant isolation. These new capabilities build on the experience gained from developing the shard rebalancer, which uses logical replication to avoid blocking writes. In addition, we made the shard rebalancer faster and more user-friendly, and we prepared for the upcoming PostgreSQL 15 release. This post gives you a quick tour of the major changes in Citus 11.1, including:

- distribute Postgres tables (scale out Postgres!) without blocking writes

- isolate tenants without blocking writes

- increase shard count by splitting shards without blocking writes

- rebalance the cluster in the background without having to wait for it

- PostgreSQL 15 support

If you want to know the full details of what changed in Citus 11.1, check out our Updates page. Also check out the end of this blog post for details on the Citus 11.1 release party which will include 3 live demos.

Distribute tables without interruption using create_distributed_table_concurrently

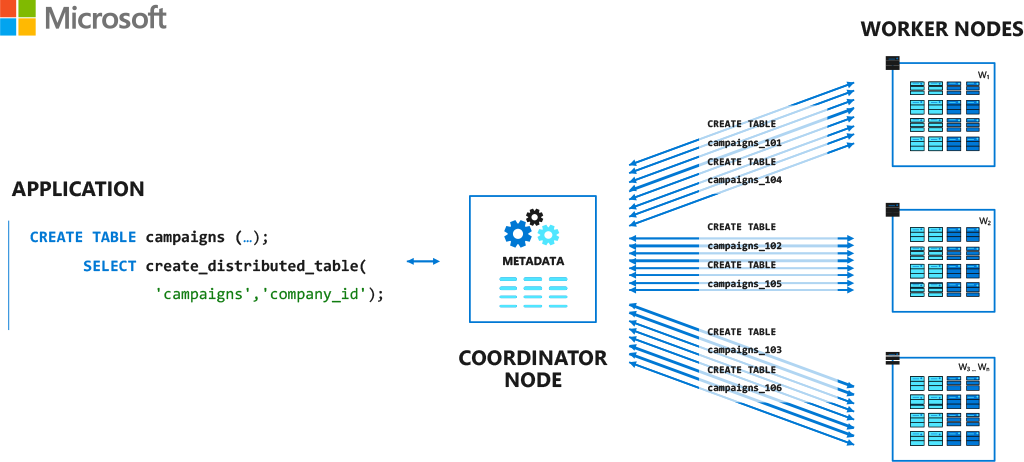

If there’s one thing you need to know when you want to start to scale out Postgres using Citus, it’s that you can distribute tables using the Citus UDF:

SELECT create_distributed_table('table_name', 'distribution_column');

When you call the create_distributed_table function, Citus distributes your data over a set of shards (regular PostgreSQL tables) which can be placed on a single node or across the worker nodes of a multi-node cluster. After that Citus immediately starts routing & parallelizing queries across the shards. If you do not call create_distributed_table, Citus does not change the behaviour of PostgreSQL at all, so you can still run any PostgreSQL workload against your Citus coordinator.

To make the experience of distributing your tables as seamless as possible, create_distributed_table immediately copies all the data that was already in your table into the shards, which means you do not have to start from scratch if you already inserted data into your PostgreSQL table. To avoid missing any writes that are going on concurrently, create_distributed_table blocks writes to the table while copying the data.

Citus 11.1 introduces the ability to distribute a table without blocking writes using a new function called create_distributed_table_concurrently.

Similar to CREATE INDEX CONCURRENTLY, you can only run one create_distributed_table_concurrently at a time, outside of a transaction, but your application will continue running smoothly while the data is being distributed. Similar to the Citus shard rebalancer, create_distributed_table_concurrently builds on top of logical replication to send the writes that happen during the data copy into the right shards.

create_distributed_table_concurrently. The application can continue writing to the table while the table is distributed, only seeing a brief blip in latency at the end.

Distributing tables across a cluster without blocking writes is quite a magical process, but there are a few limitations to keep in mind:

- If you do not have a primary key / replica identity on your table, update & delete commands fail while create_distributed_table_concurrently is running, due to limitations on logical replication. We are working on a patch proposed for PostgreSQL 16 to address this.

- You cannot use create_distributed_table_concurrently in a transaction block, which means you can only distribute one table at a time (but time-partitioned tables work!)

- You cannot use create_distributed_table_concurrently when the table is referenced by a foreign key or refers to another local table (but foreign keys to reference tables work! And you can create foreign keys to other distributed tables afterwards)

Isolate large tenants in a multi-tenant app without interruption

Citus is often used for multi-tenant apps which distribute and co-locate all the tables by tenant ID. In some cases, one of the tenants is much larger than others, causing imbalance in the cluster.

The low-level logical replication infrastructure that powers create_distributed_table_concurrently can also be used for isolating large tenants into their own shard without downtime. isolate_tenant_to_new_shard is an existing function which splits a single hash value, usually corresponding to a single tenant in a table distributed by tenant ID, into its own shard on the same node. That way, the Citus shard rebalancer can distribute the data more evenly, and you can also move the tenant to its own node.

Prior to Citus 11.1, isolate_tenant_to_new_shard blocked writes for the duration of the operation, but you do not really want to interrupt writes in your application for your most important tenant. In Citus 11.1, the same function becomes an operation that allows writes to continue until the end:

-- isolate tenant 293 to its own shard group

select isolate_tenant_to_new_shard('observations', 293);

After the tenant isolation operation, the rebalancer can move the tenant separately from other shards, allowing the cluster to be balanced. You can also use the citus_move_shard_placement function to move the tenant to its own node, or define a custom rebalance strategy.
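To make "splitting a single hash value into its own shard" concrete, here is a minimal Python sketch of the range arithmetic only. It assumes shards cover inclusive ranges of signed 32-bit hash values, and that isolating a value leaves up to three ranges (below, the value itself, above). The function name and the example hash value are hypothetical; this is not the Citus implementation, and it does not compute the hash of a tenant ID.

```python
def isolate_hash_value(shard_min: int, shard_max: int, tenant_hash: int) -> list[tuple[int, int]]:
    """Return the inclusive hash ranges left after giving tenant_hash its own range."""
    if not shard_min <= tenant_hash <= shard_max:
        raise ValueError("tenant hash is outside the shard's hash range")

    ranges = []
    # Everything below the tenant's hash value stays together (if anything is there).
    if tenant_hash > shard_min:
        ranges.append((shard_min, tenant_hash - 1))
    # The tenant's hash value becomes a range of exactly one value.
    ranges.append((tenant_hash, tenant_hash))
    # Everything above the tenant's hash value stays together (if anything is there).
    if tenant_hash < shard_max:
        ranges.append((tenant_hash + 1, shard_max))
    return ranges


# Hypothetical shard range and hash value, for illustration only.
for low, high in isolate_hash_value(-2147483648, -2013265921, -2100000000):
    print(low, high)
```

Because the tenant ends up in a range that holds only its own hash value, the rebalancer can treat that shard group as an independent unit to move.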

Increase the shard count in large clusters by splitting shards without interruption

The low-level infrastructure behind both create_distributed_table_concurrently and isolate_tenant_to_new_shard is called “shard split”.

The shard split infrastructure is more general and exposed via the citus_split_shard_by_split_points function, which splits a shard group (a set of co-located shards) into arbitrary hash ranges placed on different nodes.

One of the things you can do with citus_split_shard_by_split_points is increase the shard count in very large clusters with precise control over where the new shards are placed. By default, Citus creates distributed Postgres tables with 32 shards—allowing tables to be spread over up to 32 nodes. When you outgrow this limit, it is good to have a way to split shards without having to take any downtime:

-- split the shard group to which shard 102008 belongs into two pieces on node 2 and 3:

-- (see the nodeid column in the pg_dist_node catalog table)

select citus_split_shard_by_split_points(

shardid,

/* pick the split points, in this case we take the middle of the hash range*/

array[(shardminvalue::int + (shardmaxvalue::int - shardminvalue::int) / 2)::text],

/* send first half to node 2, second half to node 3 */

array[2, 3],

shard_transfer_mode := 'auto')

from pg_dist_shard

where shardid = 102008; /* shard to split (along with all co-located shards) */
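The midpoint arithmetic in the query above generalizes to more than two pieces. The following Python sketch computes evenly spaced split points for a shard's hash range, using the same formula as the SQL expression. It assumes each split point is the inclusive upper bound of the piece before it; both helper names are hypothetical, and the example range is what an even 32-way division of the signed 32-bit hash space would give for the first shard.

```python
def even_split_points(shard_min: int, shard_max: int, pieces: int) -> list[int]:
    """Return pieces - 1 split points that cut [shard_min, shard_max] into near-equal parts."""
    if pieces < 2:
        raise ValueError("need at least two pieces to split a shard")
    if pieces > shard_max - shard_min + 1:
        raise ValueError("more pieces than hash values in the range")
    span = shard_max - shard_min
    # Same arithmetic as the SQL above: min + (max - min) * i / pieces, with integer division.
    return [shard_min + (span * i) // pieces for i in range(1, pieces)]


def ranges_from_split_points(shard_min: int, shard_max: int, split_points: list[int]) -> list[tuple[int, int]]:
    """Turn split points into inclusive (low, high) ranges, assuming each point ends a piece."""
    ranges = []
    low = shard_min
    for point in split_points:
        ranges.append((low, point))
        low = point + 1
    ranges.append((low, shard_max))
    return ranges


# Example: cut an assumed first-of-32 shard range into four pieces.
points = even_split_points(-2147483648, -2013265921, 4)
for low, high in ranges_from_split_points(-2147483648, -2013265921, points):
    print(low, high)
```

The split points would be passed as text values in the first array argument, with one node ID per resulting piece in the second array, so a four-way split needs three split points and four node IDs.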

Having the ability to split shards can also be a lifesaver when individual shard groups start to outgrow a single node. In the future, we plan to provide a more convenient, and automated API for shard splitting, but having the low-level function can already help in case of emergency.

Shard splits are built on top of logical replication. When logical replication is active, update/delete queries fail if there is no replica identity (e.g. primary key). By default the split and isolate functions throw an error if one of the tables being split does not have a replica identity, to protect against accidental downtime. The good news is that the type of table that does not have a primary key is usually not the type of table that is frequently updated. You can allow the split to proceed with logical replication by passing the shard_transfer_mode := 'force_logical' argument. You can also block all writes for the duration of the split by passing shard_transfer_mode := 'block_writes', which skips the logical replication steps and can be a bit faster.

We will share more details on how we made logical replication work for shard splits in a future blog post.

Faster shard rebalancing in the background

Scaling out a Citus database cluster is traditionally a two-step process:

- Add a new node to the Citus cluster

- Rebalance the shards by calling a SQL function; so far this was rebalance_table_shards()

Rebalancing moves shard groups between nodes using logical replication to avoid blocking writes.

There are a few reasons we ask you to do the rebalance separately. One of them is to guard against cases where some of your tables do not have primary keys and setting up logical replication would cause update/deletes to fail. Another reason is that you may want to rebalance at a quieter time of day to make sure it does not compete for resources with peak load.

One of the more annoying aspects of rebalancing so far was that the rebalance_table_shards function could take a long time to complete, and you had to keep a PostgreSQL connection open the whole time and check whether something failed, which can occasionally happen during long-running data moves. Luckily, Citus 11.1 introduces a new way of running the shard rebalancer that places the individual shard moves in a dedicated background job queue, which lets you fire and forget.

When you call the new citus_rebalance_start function in 11.1, a rebalance job is scheduled which can consist of multiple shard group move tasks to get the cluster into a balanced state. Each move is retried several times in case of failure, and you can track the progress in the task queue, and easily cancel the job.

-- Kick off a rebalance job, completes immediately and returns the job ID

SELECT citus_rebalance_start();

┌───────────────────────┐

│ citus_rebalance_start │

├───────────────────────┤

│ 6 │

└───────────────────────┘

(1 row)

-- view the job status

SELECT state, job_type, started_at, finished_at FROM pg_dist_background_job WHERE job_id = 6;

┌─────────┬───────────┬───────────────────────────────┬─────────────┐

│ state │ job_type │ started_at │ finished_at │

├─────────┼───────────┼───────────────────────────────┼─────────────┤

│ running │ rebalance │ 2022-09-18 17:14:35.862386+02 │ │

└─────────┴───────────┴───────────────────────────────┴─────────────┘

(1 row)

-- inspect the individual move tasks

-- (blocked tasks are waiting for the running move)

SELECT task_id, status, command FROM pg_dist_background_task WHERE job_id = 6 ORDER BY task_id DESC;

┌─────────┬─────────┬───────────────────────────────────────────────────────────────────────────────────────────────┐

│ task_id │ status │ command │

├─────────┼─────────┼───────────────────────────────────────────────────────────────────────────────────────────────┤

│ 52 │ blocked │ SELECT pg_catalog.citus_move_shard_placement(102049,'wdb1.host',5432,'wdb3.host',5432,'auto') │

│ 51 │ running │ SELECT pg_catalog.citus_move_shard_placement(102047,'wdb2.host',5432,'wdb4.host',5432,'auto') │

│ 50 │ done │ SELECT pg_catalog.citus_move_shard_placement(102048,'wdb1.host',5432,'wdb4.host',5432,'auto') │

│ 49 │ done │ SELECT pg_catalog.citus_move_shard_placement(102046,'wdb2.host',5432,'wdb3.host',5432,'auto') │

└─────────┴─────────┴───────────────────────────────────────────────────────────────────────────────────────────────┘

-- wait for a job to finish

select citus_job_wait(6);

-- cancel an ongoing job

select citus_job_cancel(6);
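citus_job_wait blocks the session until the job ends. A script that wants its own timeout or wants to do other work in between could instead poll the state column of pg_dist_background_job. The Python sketch below shows only that polling loop: fetch_state is a hypothetical callable you would implement with your own database driver, and the terminal state names are an assumption (the output above only confirms 'running').

```python
import time
from typing import Callable

# Assumed names for job states that will not change any more; verify against your Citus version.
TERMINAL_STATES = {"finished", "cancelled", "failed"}


def wait_for_job(
    fetch_state: Callable[[], str],
    poll_seconds: float = 5.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Poll fetch_state() until it returns a terminal state, then return that state."""
    deadline = clock() + timeout_seconds
    while True:
        state = fetch_state()
        if state in TERMINAL_STATES:
            return state
        if clock() >= deadline:
            raise TimeoutError(f"job still in state {state!r} after {timeout_seconds} seconds")
        sleep(poll_seconds)


# Demo with canned states instead of a real query such as:
#   SELECT state FROM pg_dist_background_job WHERE job_id = 6;
canned = iter(["scheduled", "running", "running", "finished"])
print(wait_for_job(lambda: next(canned), sleep=lambda _seconds: None))
```

Injecting sleep and clock keeps the loop easy to test without waiting; in a real script you would leave both at their defaults.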

In case you prefer the existing behaviour (e.g. you have a script that relies on rebalance completing), you can also keep using the existing rebalance_table_shards function.

In addition to rebalancing in the background, we also made some significant performance and reliability improvements, making for a much better rebalancer experience.

PostgreSQL 15 support!

With Citus, you get a distributed database that allows you to use the hugely versatile, battle-tested feature set of PostgreSQL at any scale. More importantly, when a new PostgreSQL version comes out, packed with new improvements, we bring them to you immediately.

PostgreSQL 15 is around the corner, and we already made sure that Citus 11.1 works smoothly with the latest beta 4 release. Once PostgreSQL 15 GA is released, we will add full support immediately after, in a patch release to Citus. Update October 2022: As promised, Citus 11.1.3 supports PostgreSQL 15 GA.

On the 11.1 Updates page you can find the details of which PostgreSQL 15 features are supported on distributed tables.

All the scale without the downtime

One of the major challenges of having a very large database is that some operations can take a long time. When such operations block some of your Postgres writes, they can interrupt your application for several hours, so they are best avoided. Citus 11.1 gives you a lot more flexibility in managing large (and small!) databases by letting you distribute tables, split shard groups, and isolate tenants while continuing to accept writes. Moreover, you can rebalance your Citus database cluster online in the background, without having to wait.

We look forward to seeing what you will build using Citus 11.1 and PostgreSQL 15. You can learn more, drop a star ⭐ to show your support, or file any issues you run into on the Citus GitHub repo. And if you use Citus on Azure, please know our team is already doing the work to make Citus 11.1 available on the Azure managed service soon.

If you’re just getting started with Citus, here are some resources to help you start distributing Postgres!

- Download page

- Citus docs

- Getting Started page - with a curated set of useful links to docs, videos, blogs, & more for both the open source bits as well as the Citus managed service in the cloud.

Watch replay of Citus 11.1 release party livestream

Watch the Citus 11.1 release event replay with engineers from the Citus team at Microsoft talking through the new release and demonstrating how you can now distribute Postgres tables, split shards, and isolate tenants—without interruption.

- Watch the recording of the Citus 11.1 Release Party on YouTube